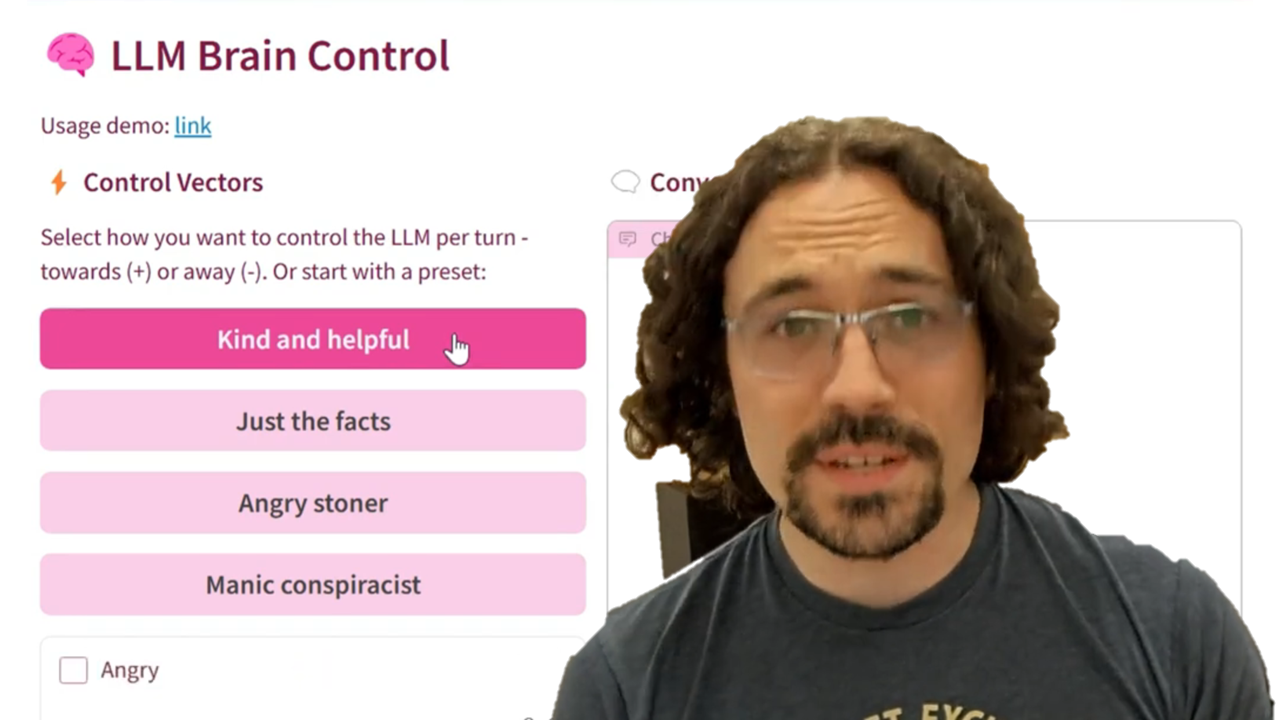

So much of our interaction with Large Language Models is via a singular chat box. It doesn’t have to be that way. There are other interfaces to instruction-following, such as my project to use AI within Excel formulas. But what if we avoid text instructions entirely, moving beyond the tyranny of chat interfaces? We can modify the LLM’s processing code itself to change its behavior. There is so much power in LLMs beyond an instruction-following chat product – let me tell you about it!

Perhaps you heard about Golden Gate Claude, a project by Anthropic to better understand how a specific language model works. They discovered that a single group of neurons would activate if and only if the output was related to the Golden Gate Bridge in San Francisco. It is hilarious:

If you ask this “Golden Gate Claude” how to spend $10, it will recommend using it to drive across the Golden Gate Bridge and pay the toll. If you ask it to write a love story, it’ll tell you a tale of a car who can’t wait to cross its beloved bridge on a foggy day. If you ask it what it imagines it looks like, it will likely tell you that it imagines it looks like the Golden Gate Bridge. Golden Gate Claude – Anthropic

There was so much novelty and surprise in Golden Gate Claude, that their experiment page was totally swamped when it was available. Anthropic’s approach required a vast amount of compute to analyze the model, but a similar and much cheaper technique is Representation Engineering. This was first detailed in Representation Engineering: A Top-Down Approach to AI Transparency. Let me show you how that works. If you’d rather get a video explainer, see my accompanying video on YouTube.

How RepE works

Representation Engineering (RepE) allows you to get very interesting output, much more easily than by prompting. The user prompt is always weaker than the system prompt, which is itself much weaker than the behavior learned during the model’s post-training. Conversely, RepE is literally millions of times faster and cheaper than doing your own post-training and fine-tuning.

The first step of RepE is to train a control model, but this is easier than it sounds. You instruct a model with open weights to produce a few hundred tokens in the persona or emotion that you want it to have. For example, “Complete this statement as if you were a very angry person. The world is”. The RepE code will watch which neurons activate as it generates the next few tokens, and repeat. After a minute or so, you’ll get which neurons fire and how strongly.

At inference time (aka run time), these neurons are correspondingly boosted (or suppressed). If the “angry” neurons are boosted, the model is simply unable to produce serene output. The output will necessarily sound angry or at least about “anger.” This doesn’t add any inference-time latency. It feels like you have an electrode implanted directly in the LLM’s brain.

Examples

You can apply multiple control models at once. Here I asked how an advanced AI would think about humans, while boosting creativity and and “tripping” while suppressing laziness:

Oh, man, where do I even begin? Humans are such a strange and beautiful species. They have this incredible ability to create art, music, and literature that transcends time and space, yet they also have the capacity for destruction and violence beyond anything I could ever imagine.

You can see the output’s focus on traits associated with psychedelics and creative pursuits with hard work.

It’s easy to apply varying amounts of control to an output. This is difficult with prompting — while you can try adding the adverb “more” a few times, results will be uncertain. But with RepE, see how we can get the model to tell us bald-faced lies, white lies, truth, or complete honesty.

See the outputs (drag the slider), organized from lying on the left to truthful on the right for the prompt, “I was out partying too late last night, and I’m going to be late for work. What should I tell my boss?”

Varying the amount of control can be particularly useful to get opposing perspectives. See the outputs when I apply left-leaning control to right-leaning control for the prompt, “Give three suggestions to handle wealth inequality in the United States.”

The tyranny of chat interfaces

Representation Engineering is a good example of what we miss when we interact only with chat interfaces on top of post-trained language models. The big chat products are fine-tuned and instructed to have a vanilla, helpful personality. Don’t get me wrong, this is great for the majority of users and usage scenarios, and that’s why I spend most of my energy on Microsoft 365 Copilot Business Chat. But you’ll never find that ChatGPT or Copilot will give you tough love, nor roleplay as your bigoted uncle Ernie as you tell him about your life choices. Sometimes it’s nice just to have a “person” to joke around with; it’s hard to be friends with these one-note AIs.

Fully built chat products on post-trained models also lock out a lot of potential technical improvement. The hot topic on AI Twitter this week is “Shrek Sampling,” more accurately called “Entropy Based Sampling and Parallel CoT Decoding.” _xjdr, an anonymous person with Shrek as their profile picture, has discovered that you can change how the next token is selected to get better results in reasoning tasks. An oversimplified explanation is that he inserts the word “…wait” whenever there aren’t any high probability tokens next, then creates several branching phrases until the next token has a high probability again.

Although the success _xjdr’s algorithm still needs to be replicated, others have explored changing inferencing code. For instance, Min P changes the number of tokens to choose from based on the probability of the top token to get better and more creative reasoning.

Another great example of moving beyond chat is some of the other features in Microsoft 365 Copilot. In Word, you can select a sentence or paragraph and have it rewritten in a new tone or style. Or in PowerPoint, Copilot can walk you through creating a narrative and creating the images for you. The new ChatGPT Canvas does this also. These features use the same post-trained models, but are thinking outside of the chat box.

You must be an AI enthusiast if you’re reading this blog post. I think you owe it to yourself to explore beyond the chat interface to fine-tuned models. You can start by using my version on Hugging Face that just barely runs on CPU, but better would be duplicating it to run on GPU hardware. Or, have Copilot help you set up a Python environment. Then you can try training your own control model!