Meanwhile, Excel is the worst interface to chat. From Nelly’s music video “Dilemma.” Image © Universal Motown Records

As PM for an AI chat product, I tell anyone who will listen, “Chat is the worst interface.” If you need to get something done, would you rather click a button or press a few dozen keys on a keyboard? Would you rather the computer do the work accurately, or sometimes not do it at all? Should it happen nearly instantly, or would you rather wait several seconds? Perhaps you’d like to remember everything that has happened so far without any visual reminders or cues? But then I continue, “Chat is the worst interface… except for everything else we have tried.”

There’s a reason why chat is the best interface to today’s generative AI and language models, even though it is slow, takes more effort, and makes mistakes. Chat is disambiguation.

Disambiguating tasks

OpenAI’s o1 model can now solve some of the world’s most difficult math problems. Even professional mathematicians start sweating when they look at test questions outside their narrow subdomain. OpenAI and other AI labs focus on math specifically because these are some of the most difficult self-contained problems that exist. Similarly, the Center for AI Safety has been paying $5,000 for problems, in any discipline, that AI cannot solve. We must face up to the fact that today’s AI can solve any well-defined, self-contained task better than a 99th-percentile human.

You might be quick to disagree, but notice how much heavy lifting the words “well-defined” and “self-contained” do in that statement. By “self-contained,” I mean that the task requires no information beyond what the prompt contains. By “well-defined,” I mean that there is no ambiguity in measuring whether the task succeeded. Math problems are ideal on both counts: formal logic in both the question and the answer makes misunderstanding literally impossible.

The rest of our work is harder to define than mathematics. Poorly defined tasks lead to AI slop.

The cause of AI slop

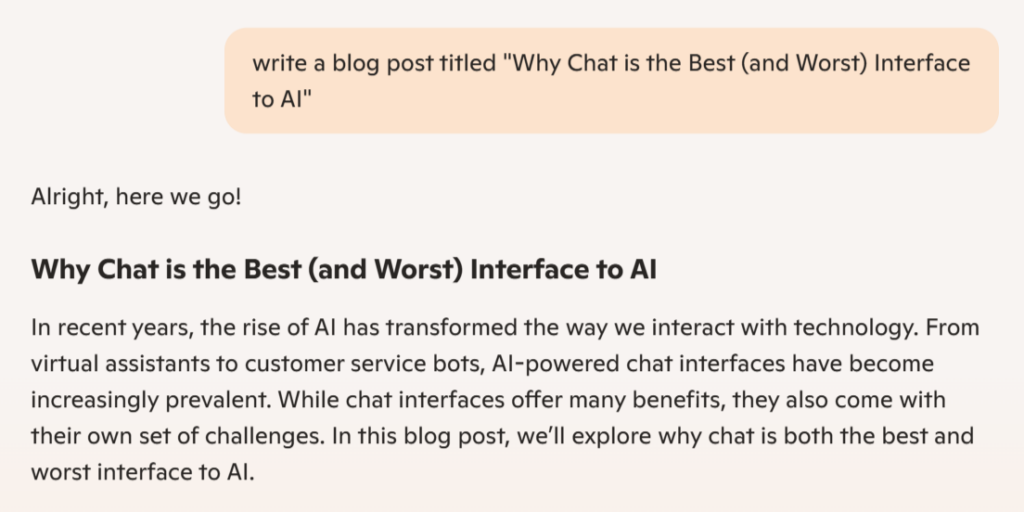

I asked Copilot to write a blog post titled “Why Chat is the Best (and Worst) Interface to AI”. If you’re a regular AI user, you’ll recognize the style of the extremely average opening paragraph:

And why does Copilot, backed by a billion-dollar model, give such an average paragraph? Because I asked for an average paragraph! I didn’t specify anything about how to write it, so an average result was exactly the most accurate answer. LLMs are trained to give this kind of wordy, answered-but-not-answered result unless you say more about what you want. This underspecification is not only the cause of AI slop; it is also why most tasks fail. Specifying a task precisely enough, at least outside the realm of mathematics, is extremely difficult. You could learn effective prompt engineering, but the chat interface offers a faster and more natural way.

Chat is disambiguation

I gave Copilot some more instructions: things I liked and things I didn’t. In just a few turns and a few seconds, I made it a little clearer what argument I was making. One of the resulting paragraphs is not bad, if not exactly what I would write:

Despite these hiccups, chat interfaces have a powerful tool up their sleeve—disambiguation through conversation. By asking follow-up questions and engaging in a back-and-forth dialogue, AI can gradually narrow down what you actually need. This iterative process helps clear up ambiguities and leads to more accurate outcomes. It’s like having a helpful assistant who asks for more details when things aren’t clear, making sure they get it right in the end.

I could easily write a whole post this way, correcting and improving Copilot’s output through conversation. Conversation is a natural human skill. It’s our primary means of communication, shaped by genetics, formal schooling, and above all daily use. We’re good at it! When someone doesn’t understand us, we clarify. When they hear the wrong thing, we correct it and say it more clearly.

Because so much of their training data is also conversation, LLMs are great at conversation too. Ironically, they are not as naturally good at following instructions. In other words, they respond better to conversation than to carefully engineered prompts. You will get much more useful results by iterating through a conversation than with elaborate Markdown prompts and that “100 best prompts” cheat sheet.

Despite all the very real problems with chat as an interface, its ability to define tasks clearly through multiple turns of conversation is unmatched. It is, so far, the best interface to AI.