I recently got a complaint about a Copilot Agent that wasn’t working reliably. The developer had designed a scenario where the user types the name of a product, and the AI returns information about that product from a document, formatted as a structured report. They complained that the agent would sometimes return only part of the information, or would vary the report format. It should be so easy! I felt bad, but I had to explain that this was a terrible use of AI. They could have implemented the same scenario in the 1960s!

There is a trap in building AI products that ensnares many: using AI when simpler software will do—better, faster, cheaper, and more reliably.

The wrong approaches

Tasks where computers are good but AI is not

I see a common pattern of failure across many users. They instruct a chatbot to do something they think is easy, such as arithmetic or spelling, because it has always been easy for computers. When the AI fails or produces slop, they give up. They were earnestly trying to understand what all the hype is about, and they had a bad first experience.

Even if users avoid arithmetic or spelling tasks, many language tasks that seem easy are actually very hard for AI.

In Microsoft 365 Copilot, our first-time users often expect generative AI to do the same things as previous AI features, only better. A perfect example is sorting email by importance. People naturally assume that this acclaimed AI should handle such a scenario trivially. Unfortunately, LLMs are not good at this task. An LLM can compare the characteristics of an email to those of spam or promotional email, but it doesn’t know anything about your personal priorities. It can’t rank your emails by importance criteria that were never specified. The task also often requires a long context window, although this hurdle is shrinking.

In my last article, I described the characteristics of tasks that are difficult for AI. Commonly, the AI may be missing the context it needs, the task may be ambiguous, or the task may require action beyond returning text.

Replacing a button with chat

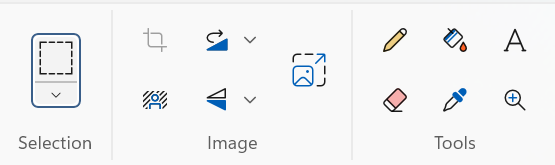

The more insidious problem is putting a chat interface to an LLM where a clickable button would do. Software 101 is to understand what the user wants to do and make it as easy as possible. We interview, prototype, A/B test, analyze, and then put a button in a menu on the screen. Our entire history of software, from the command line to the GUI to the API, is built to operate this way.

It’s hard to break out of this model, and it’s nearly impossible to overhaul existing software. So instead, we add a chat box, hand an LLM some grounding data and the existing API, and set it loose on our users. This is a mistake: each function of the API represents a well-researched user scenario, and it is already implemented. Instead of letting users click a button, you make them press dozens of keys. Then the LLM takes several seconds and fails a double-digit percentage of the time anyway.

This is the mistake that the developer of the Copilot Agent was making. If you have a button, leave it alone. Use AI for transformational value instead.
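The opening scenario, typing a product name and getting back a structured report, can be sketched without any AI at all. This is a minimal Python sketch, assuming the product information has already been extracted from the document into structured records; the `PRODUCTS` data, its fields, and the `product_report` function are hypothetical and for illustration only. A plain dictionary lookup returns every field, in the same order and format, on every call.

```python
# Hypothetical product data, assumed to have been extracted once from the
# product document into structured records (not the developer's real schema).
PRODUCTS = {
    "widget pro": {"name": "Widget Pro", "sku": "WP-100", "price": "$49", "warranty": "2 years"},
    "gadget lite": {"name": "Gadget Lite", "sku": "GL-200", "price": "$19", "warranty": "1 year"},
}

# Fixed report layout: (label shown to the user, field in the record).
REPORT_LAYOUT = (
    ("Name", "name"),
    ("SKU", "sku"),
    ("Price", "price"),
    ("Warranty", "warranty"),
)


def product_report(query: str) -> str:
    """Look up a product by name and render every field in a fixed format."""
    # Normalize the user's input so "Widget Pro" and " widget pro " match.
    record = PRODUCTS.get(query.strip().lower())
    if record is None:
        return f"No product found for {query!r}."
    # Every field, always in the same order and format.
    return "\n".join(f"{label}: {record[field]}" for label, field in REPORT_LAYOUT)


print(product_report("Widget Pro"))
```

Because the report template lives in code rather than in a prompt, it cannot skip a field or drift in format from one run to the next, and it answers instantly. In a product, this is the kind of logic that would sit behind a search box or a button.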

Real product examples

If large language models are bad at traditional computing tasks, what tasks are they good for? Looking at some successful and some terrible product features, we can identify a few common factors:

- Writing notes and action items for a meeting requires summarizing a lot of language, it is tedious for experts, and the input varies significantly.

- “Enhancing” a LinkedIn post via a 🪄 button generates language, but the necessary context about the user’s personality and style is not available. Because it is built as a button, the output is necessarily very uniform and sounds like it was written by AI.

- Although it may not be good for the world, using AI as a friend, companion, or therapist is extremely popular. Users of this software chat effectively and naturally to define the context and task.

- Writing pages from a few bullet points necessarily leads to AI slop, because the AI is inventing all of the context.

- Web research is an amazing scenario, as it’s hard to imagine more text to summarize than the entire public internet.¹

- Siri and Alexa are frustrating because they have very limited functions and make you guess how to use them.

- Customer service and technical troubleshooting can work well, especially as reasoning models become widely available.

Use AI for transformational value

I can’t say that I understand every possible application of AI, but I do have some pointers. You probably already know that AI excels at tasks that involve summarizing or generating language. I often think of this as “translating,” and not just between languages. You can also translate between a meeting transcript and a meeting summary, or between a technical report and a fantasy novel!

Recently, AI has become excellent at reasoning. But reasoning is only useful when the details of the task change with each execution. Put another way, either the input or the output will be dramatically different for every execution. A chat interface helps the user define the task precisely.

AI can be good at those sorts of tasks, but the feature as a whole is only helpful if users couldn’t easily do the task themselves. That means the task is very difficult for non-experts or time-consuming for humans.

If your project isn’t aligned with these characteristics, consider a simpler and more reliable approach than AI. Could it just be a UX button with good old-fashioned programmatic output? No one ever wants to go Office Space on a button that works well.

Or better yet, can you tackle a much more difficult problem—one that is ambiguous, broad, and requires deep language understanding or reasoning?

1. I wrote this sentence the day before OpenAI’s Deep Research announcement. It’s a good scenario!