This article is solely my personal opinion, and does not represent my employer’s position.

What are AI Agents? It seems like every software company in the world is now describing their software as an agent. There’s a long tradition of attaching each new technology buzzword to a company’s software, with or without making any changes to it whatsoever. “Agent” seems to be primarily the replacement for “AI,” “Big Data,” “Analytics,” or “Responsive.”

But AI Agents are a real technology. Even if most are not, some products are being genuine when they use the term. This article lays out the functionalities and capabilities that make up AI Agents – and introduces a complexity hierarchy so that you can evaluate for yourself any software that claims to be agentic.

“Agent” Defined

The most accurate (and least precise) definition of an AI Agent is software that has agency to act on your behalf. That is, it can “decide” what to do. This comes from the real world: when you hire a real estate or talent agent, they do the work to execute your will. I don’t think this definition is helpful, because my furnace can “decide” to shut off the heater because it is warm enough, and the computer from WarGames can “decide” to start thermonuclear war. Most definitions, including Wikipedia’s, cover this entire gamut. Let’s be more precise.

When honest marketers describe their AI software as an Agent, they generally mean a Large Language Model (LLM) application that is more capable than ChatGPT was in its early days. The early ChatGPT interface already had several important features that would not be called agentic:

- A system prompt

- Conversation memory

- Fine-tuned model for instruction following

- File upload

- Basic voice input/output

This already rules out plenty of software that’s billed as an AI Agent. If the AI is applied via button-click, it has less agency than ChatGPT did at its launch in 2022. This doesn’t make it bad though! Teams Meeting Recap is one of my favorite AI features, despite being the antithesis of an agent.

Agentic Behavior

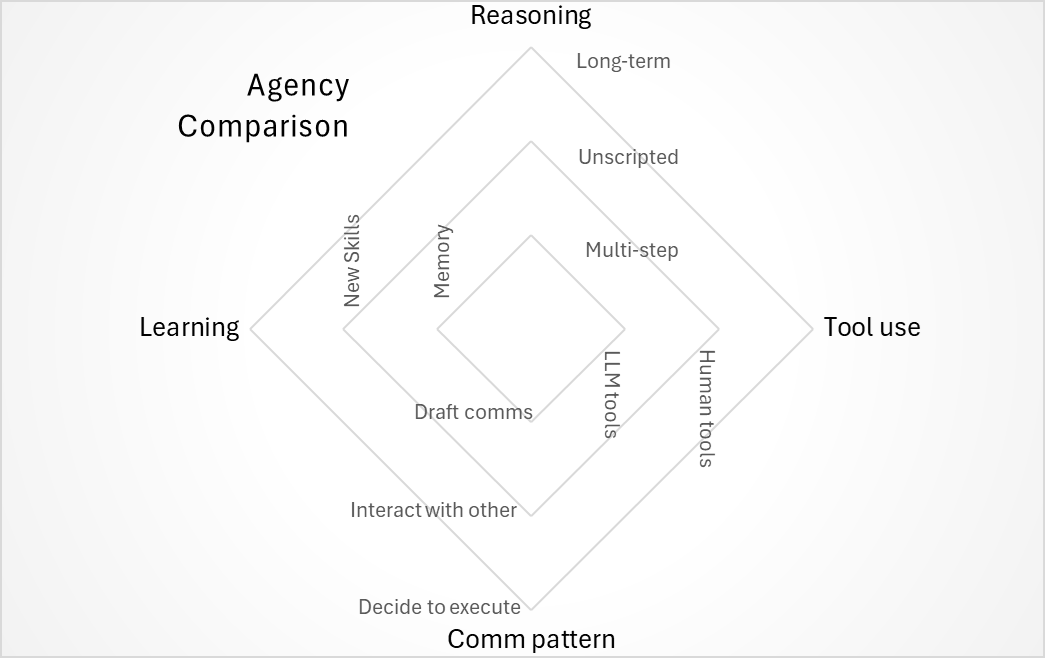

Moving on from what is not an agent, let’s discuss those capabilities that can be added to an LLM application that do increase agency. I’ll give a definition of each functionality, and then explain a complexity hierarchy.

- Multi-step processing: The baseline, and what I would call the core feature, is making more than one call to the model. The simplest version of this is one step for reasoning and a second step for synthesis. This is done through a fixed workflow.

- Unscripted reasoning loops: A more complex version of multi-step processing. With an unbounded reasoning loop, the LLM can “decide” to apply another round of reasoning. For instance, you’ll often see Copilot make new search requests when the first searches didn’t yield very good results.

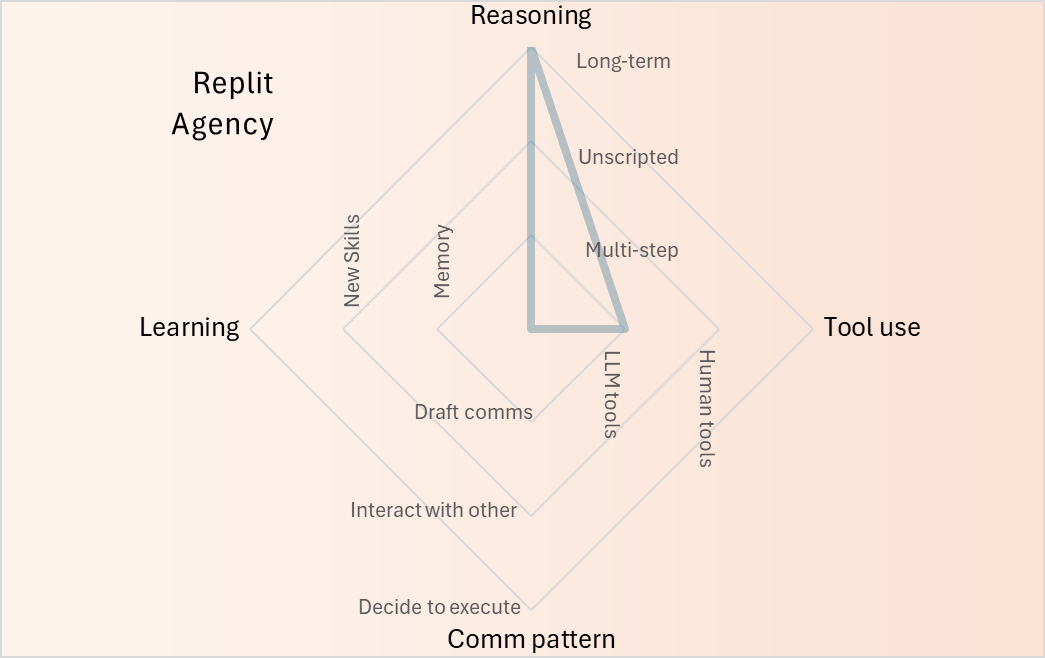

- Work long-term in the background: Even OpenAI o1 usually returns an answer while you watch. Researchers suspect that as we scale inference-time compute, more complex tasks can be completed. This will demand a different UX than synchronous chat. Replit is close to this.

- Using LLM tools: These are functions or capabilities expressly designed for use by LLMs. One example is the first set of plugins that OpenAI added to ChatGPT. Tools like these allow the AI to take action outside of its chat box.

- Using human tools: A few AI programs can interact with software designed for humans, like an external website or the operating system on which they’re running. I wouldn’t include the software it is built into, as that integration code makes it more like using an LLM tool.

- Draft communications on the user’s behalf: When Copilot in Outlook suggests a draft email, it is doing some of your work to communicate with another human. If you were to immediately click send without reviewing it, you would have given it quite a lot of agency!

- Interact with a different human or agent: Nearly all AI interacts only with the user, for good reason. An AI that can interact on your behalf with another person or that person’s agent would be very agentic. And, I’d argue, a bad idea if based on current technology.

- Choose when to execute: Generative AI today begins to process when the user clicks the submit button. In the future, we could imagine AI applications deciding to execute on their own.

- Store memories: Both ChatGPT and Microsoft Copilot now remember certain facts that the user tells them. Whatever mechanism is used to store and retrieve memory, this is a simple form of improving with use.

- Learn new skills: As far as I know, the only generative AI that learns new abilities is in research labs. I expect future AI applications will be able to move beyond remembering facts and instead improve and grow in capabilities.

This is certain to be an incomplete list, but this is what I see on the horizon. And there are other useful AI capabilities that are not related to agents, such as a natural voice interface or RAG. “AI Agent” isn’t a synonym for “advanced”!
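To make the first two reasoning capabilities and LLM tool use a bit more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: `fake_llm` and `fake_search` are hypothetical stand-ins rather than any vendor's API, and the `REASON:` / `SYNTHESIZE:` / `DECIDE:` prompt prefixes are invented for the demo. The `max_steps` cap is also my addition; a real "unbounded" loop would still want some safety limit.

```python
from typing import Callable, Dict, List, Tuple

LLM = Callable[[str], str]
Tool = Callable[[str, int], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; it only knows three prompt prefixes."""
    if prompt.startswith("REASON:"):
        return "notes on " + prompt[len("REASON:"):].strip()
    if prompt.startswith("SYNTHESIZE:"):
        return "answer from " + prompt[len("SYNTHESIZE:"):].strip()
    if prompt.startswith("DECIDE:"):
        # Keep asking for searches until a good result appears in the context.
        return "FINISH" if "good result" in prompt else "SEARCH"
    return ""


def fake_search(query: str, attempt: int) -> str:
    """Hypothetical LLM tool: the first attempt is weak, later ones are good."""
    return "good result" if attempt >= 2 else "weak result"


def fixed_workflow(question: str, llm: LLM) -> str:
    """Multi-step processing: always one reasoning call, then one synthesis call."""
    notes = llm("REASON: " + question)
    return llm("SYNTHESIZE: " + notes)


def reasoning_loop(
    question: str, llm: LLM, tools: Dict[str, Tool], max_steps: int = 5
) -> Tuple[str, int]:
    """Unscripted loop: the model decides each round whether to search again."""
    context: List[str] = []
    for attempt in range(1, max_steps + 1):
        decision = llm("DECIDE: " + question + " | " + "; ".join(context))
        if decision != "SEARCH":
            break  # the model chose to stop gathering information
        context.append(tools["search"](question, attempt))
    answer = llm("SYNTHESIZE: " + "; ".join(context))
    return answer, len(context)


if __name__ == "__main__":
    print(fixed_workflow("why is the sky blue?", fake_llm))
    print(reasoning_loop("why is the sky blue?", fake_llm, {"search": fake_search}))
```

The difference to notice is who controls the flow. In `fixed_workflow` the developer hard-codes the two calls; in `reasoning_loop` the number of tool calls depends on what the model returns each round.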

Evaluating Current AI’s Agency

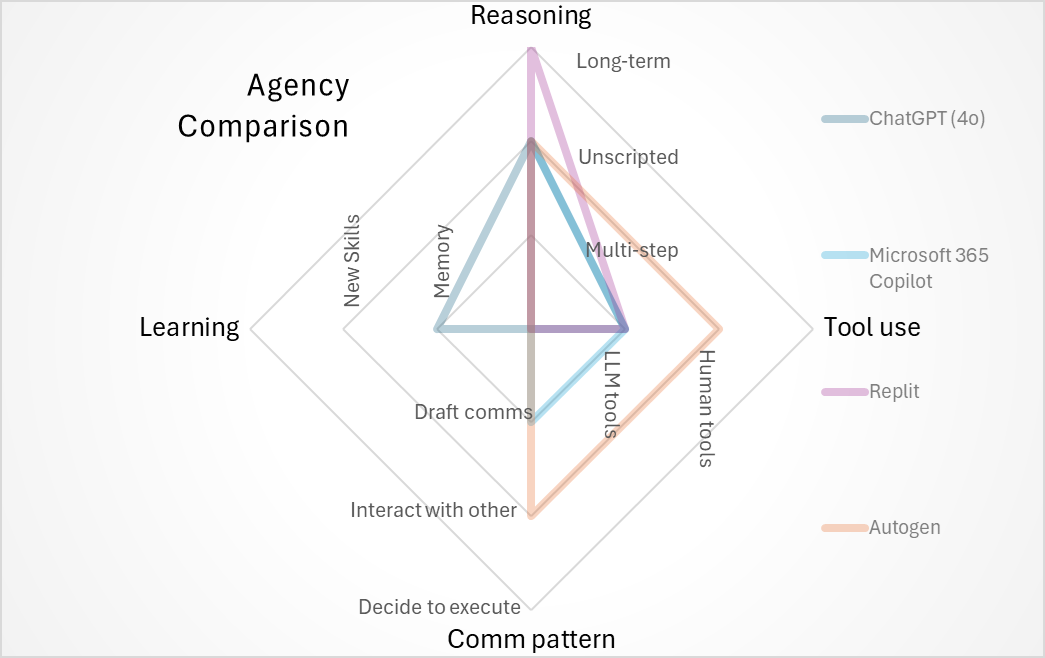

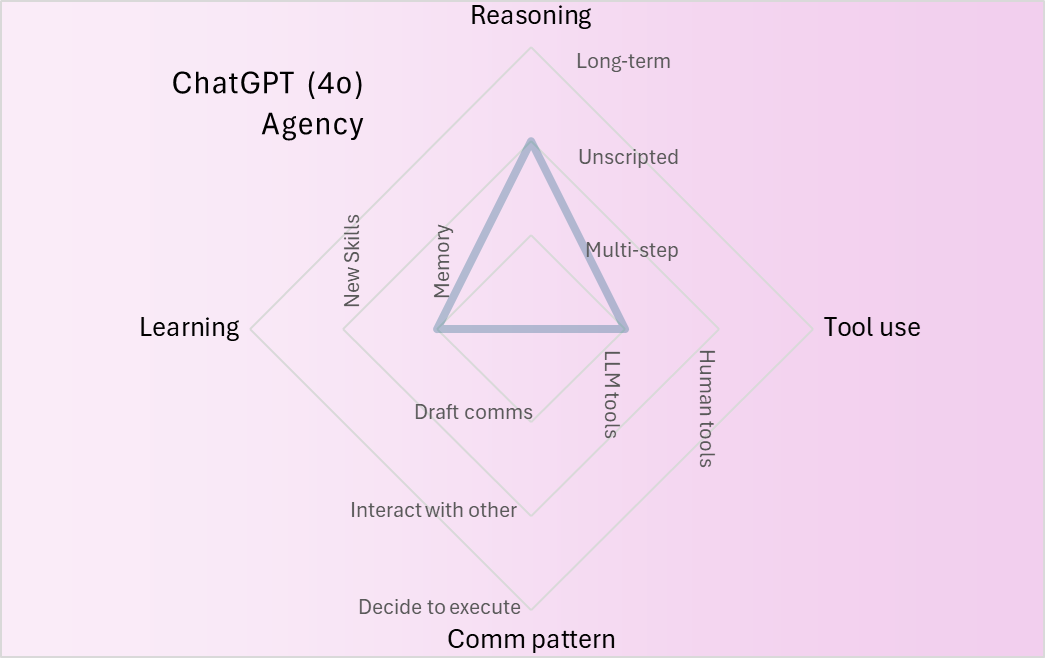

A program being agentic isn’t the same thing as being very good. Anthropic’s Claude 3.5 Sonnet is considered by many today to be the LLM product that produces the best responses, but it has few features of AI Agents. Still, if we arrange these capabilities across reasoning, tool-use, interaction, and learning, and sort them by difficulty to implement, we can get a fair picture of what AI Agents exist today.
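If you prefer a checklist you can run, the same arrangement can be sketched in a few lines of Python. Treat the details as assumptions: the grouping of the ten capabilities into four dimensions follows the order of the list earlier in this article, and the scoring rule (the highest level reached in each dimension) is just one simple choice, not necessarily how the chart is built. The example product is hypothetical.

```python
from typing import Dict, List, Set

# Capabilities from this article, grouped by dimension and ordered from
# easiest to hardest. The grouping and ordering are assumptions of this sketch.
HIERARCHY: Dict[str, List[str]] = {
    "reasoning": [
        "multi-step processing",
        "unscripted reasoning loops",
        "long-term background work",
    ],
    "tool-use": ["LLM tools", "human tools"],
    "interaction": [
        "draft communications",
        "interact with other humans or agents",
        "choose when to execute",
    ],
    "learning": ["store memories", "learn new skills"],
}


def agency_profile(capabilities: Set[str]) -> Dict[str, int]:
    """Return the highest level reached in each dimension (0 means none)."""
    known = {name for levels in HIERARCHY.values() for name in levels}
    unknown = capabilities - known
    if unknown:
        raise ValueError(f"unknown capabilities: {sorted(unknown)}")
    return {
        dimension: max(
            (level for level, name in enumerate(levels, start=1)
             if name in capabilities),
            default=0,
        )
        for dimension, levels in HIERARCHY.items()
    }


if __name__ == "__main__":
    # A button-click feature with a single model call has no agentic capabilities.
    print(agency_profile(set()))
    # A hypothetical chat assistant with search, tools, and memory.
    print(agency_profile({
        "multi-step processing",
        "unscripted reasoning loops",
        "LLM tools",
        "store memories",
    }))
```

A profile per dimension, rather than a single score, matches the point of this section: a product can be far along in reasoning while having no agency at all in interaction or learning.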

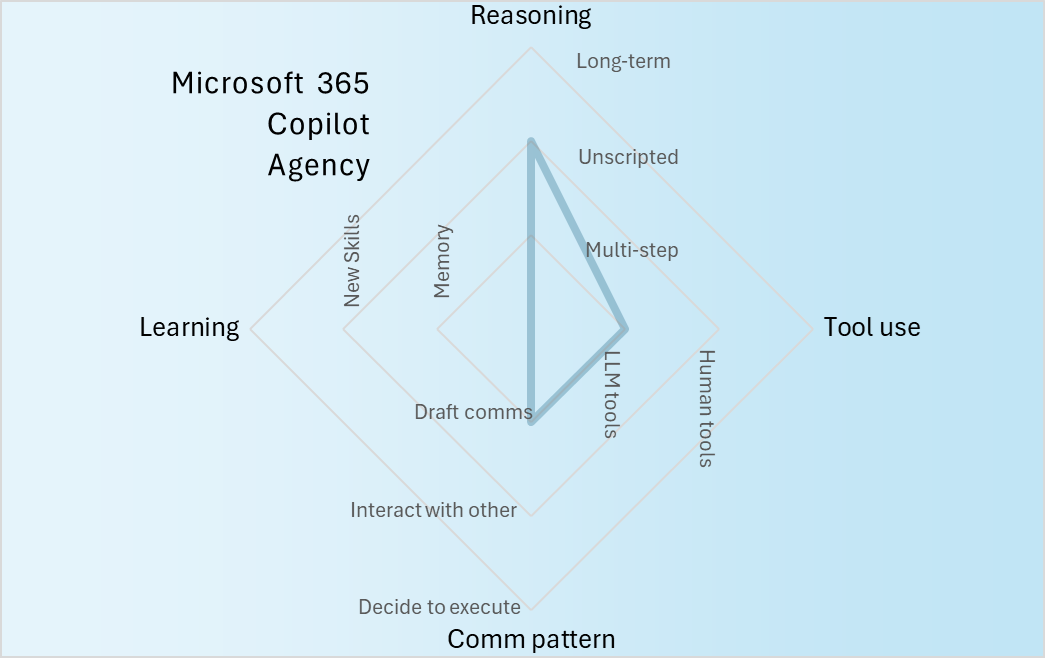

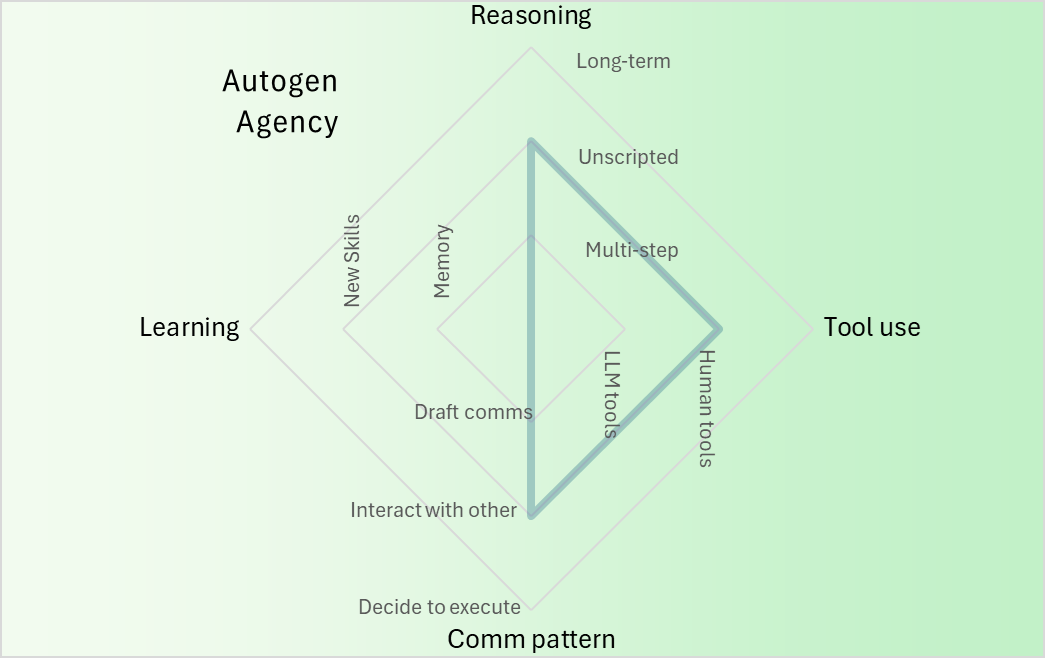

Here’s a workbook you can use for the chart. Let’s compare a few AI products’ agency (click to embiggen):

You can see that each company has a very different view of what an AI Agent is. I like the products I just described, but many other software companies are misusing the term “agent.” If an “agent” is a single call with a single system prompt to reprocess user data, the AI has no agency at all. It is too late to fix the term in our industry. However, now you have the tools to determine how agentic a product is, and in what dimensions.

You can read my older post, How to Improve Your LLM Application, for tips on adding agency to your intelligent application. Get out there and start building!