It should be no surprise that language models process and generate language, so jobs and tasks that involve language have the most to gain from the explosion of language models. But why does programming benefit so much more than writing marketing copy or magazine articles? There are a few reasons that programmers and programming are well suited to using AI, but the big one is that language models are simply better at writing code than at writing natural language.

Best suited for using AI

Whether fixing bugs, adding features to existing codebases, or developing a greenfield product, programmers read and understand a lot of text (from the codebase or from the internet) and write a much smaller amount of code. At the highest level, programmers are summarizing a large amount of language in a task-oriented way to produce a smaller amount of text. This is exactly what language models are best at. Going the other way, using a small amount of text to generate a lot of text, usually yields boring or hallucinatory language. And summarizing text without a specific purpose in mind is a more basic capability. The day job of programmers is the one most closely aligned with what LLMs can do.

Programmers are more likely than average to find computer technology interesting – or else they may have found a different job! They’re also more likely to understand the basics of how AI works, which makes it easier to use. Many programmers are also used to working with complicated software, like their development environment. Because they use complicated software day in and day out, they may have built up the skill and tolerance needed for software that is difficult to use or produces incorrect results.

But product marketers also summarize a large amount of language into a smaller amount of text with a purpose, and mechanical engineers are used to difficult software. Why does programming get accelerated more easily?

Why LLMs are better at programming than natural language

Quick primer on programming and AI

Software is made up of text written in a programming language. Like natural languages, programming languages have the equivalent of spelling, grammar, and semantics. And software has the equivalent of story structure. Data scientists realized several years ago that they could train language models on programming languages just like they do on English and other natural languages. But language models tend to produce better code than English prose. How come?

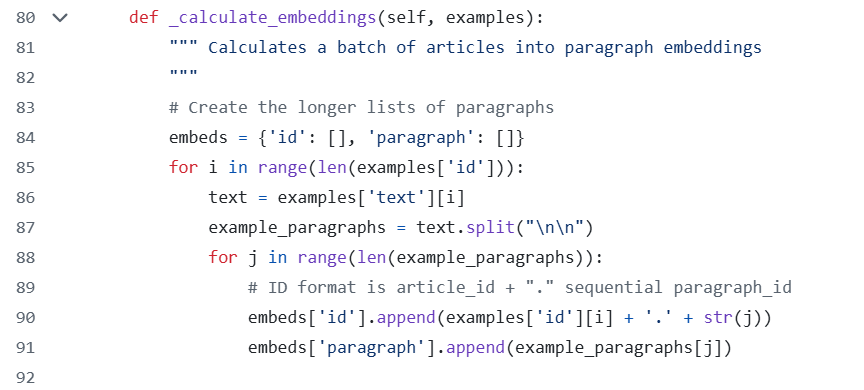

One reason that AI is often good at programming is that code is often commented. In the image above, the blue and grey text is an English description of the actual Python code. Having both the description and the code allows for a technique called Supervised Learning, where every training example comes paired with a label. Until recently, most machine learning used supervised learning. But training language models (mostly) doesn’t. Most of the pre-training of a language model actually uses Unsupervised Learning (more precisely, self-supervised learning, where the model learns to predict the next piece of text from the text that came before it). This doesn’t need descriptive tags to be included along with the data. As a result, it’s relatively easy to generate our own code data to pre-train models; this is far harder for natural language. But the real reason LLMs are better at programming so far is bigger.
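To make the "no descriptive tags" point concrete, here is a minimal sketch of how training examples can be cut from raw code with no human labeling. The function name `next_token_pairs`, the `context_size` parameter, and the whitespace tokenizer are all assumptions made for illustration; real models use learned subword tokenizers and far longer contexts.

```python
def next_token_pairs(tokens, context_size=3):
    """Build (context, target) pairs from raw tokens.

    The "label" for each example is simply the token that comes next,
    so nobody has to annotate anything by hand.
    """
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs


# Assumption: splitting on whitespace stands in for a real tokenizer.
code = "def add ( a , b ) : return a + b"
tokens = code.split()
pairs = next_token_pairs(tokens)

for context, target in pairs[:3]:
    print(context, "->", target)
```

Every position in the text becomes a training example for free, which is why pre-training can use huge amounts of uncommented code and plain prose alike.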

Reinforcement Learning

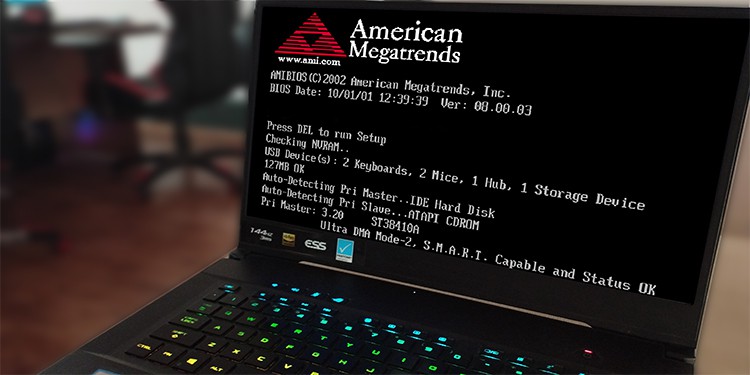

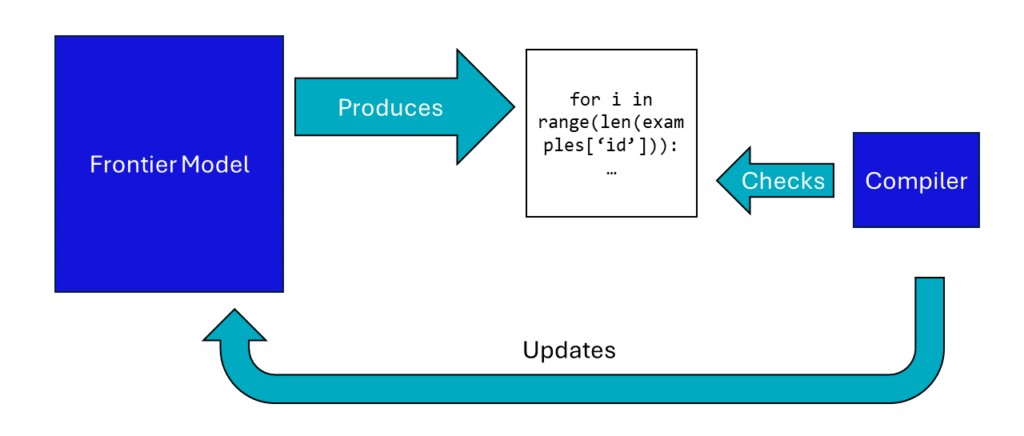

The real reason language models have gotten so much better at programming languages is what data scientists do in post-training. Programming is well suited to another technique, Reinforcement Learning. In Reinforcement Learning, correct answers are rewarded and incorrect answers are punished. These rewards and punishments update the model so that it more frequently takes the actions that result in correct answers.
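As a toy illustration of reward and punishment, the sketch below trains a tiny "model" that picks one of three candidate answers, only one of which is correct. It uses a REINFORCE-style policy-gradient update, which is one common way to do this and not necessarily what any particular lab uses. The names `train`, `correct_index`, and `lr` are hypothetical, and a real language model has billions of parameters where this has three numbers.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(correct_index=2, num_actions=3, steps=500, lr=0.5, seed=0):
    """Reward the correct answer (+1) and punish the others (-1)."""
    rng = random.Random(seed)
    logits = [0.0] * num_actions  # start with no preference
    for _ in range(steps):
        probs = softmax(logits)
        # The model "answers" by sampling from its current preferences.
        action = rng.choices(range(num_actions), weights=probs)[0]
        reward = 1.0 if action == correct_index else -1.0
        # REINFORCE update: move the logits along the gradient of
        # log(probability of the sampled action), scaled by the reward.
        for j in range(num_actions):
            indicator = 1.0 if j == action else 0.0
            logits[j] += lr * reward * (indicator - probs[j])
    return softmax(logits)


final_probs = train()
print([round(p, 3) for p in final_probs])
```

After training, almost all of the probability sits on the correct answer, even though the model was never shown it directly. It only ever saw rewards and punishments for its own guesses.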

When you have a bit of code, a much simpler piece of software can determine whether it has correct spelling, grammar, and semantics. This software isn’t AI at all, but a compiler or simple execution environment. Scientists have the language model they are training produce billions of snippets of code, and other software can decide whether the answers are correct and should be rewarded. The snippets can get longer until they are entire projects. The only limit we’ve found for how good this simple feedback system can get is having enough datacenter hardware to run it.
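Here is a minimal sketch of what such a checker could look like, assuming each generated snippet is supposed to define a function and comes with a few input/output test cases. The function name `score_snippet` and the +1/-1 reward values are assumptions made for illustration. Note that `exec` on untrusted code is unsafe, so a real pipeline would run snippets in a sandbox.

```python
def score_snippet(snippet, func_name, test_cases):
    """Return +1.0 if the snippet compiles and passes every test, else -1.0."""
    try:
        # "Spelling and grammar": does the text even parse as Python?
        compiled = compile(snippet, "<candidate>", "exec")
    except SyntaxError:
        return -1.0

    namespace = {}
    try:
        # "Semantics": run the code and compare against known answers.
        # WARNING: exec is not safe for untrusted code; sandbox it in practice.
        exec(compiled, namespace)
        func = namespace[func_name]
        for args, expected in test_cases:
            if func(*args) != expected:
                return -1.0
    except Exception:
        return -1.0
    return 1.0


tests = [((1, 2), 3), ((0, 0), 0)]
candidates = [
    "def add(a, b):\n    return a + b\n",  # correct
    "def add(a, b):\n    return a - b\n",  # runs, but wrong answer
    "def add(a, b) return a + b",          # does not parse
]
rewards = [score_snippet(c, "add", tests) for c in candidates]
print(rewards)
```

Nothing in this checker is AI. It is cheap to run, gives the same verdict every time, and can score as many snippets as the hardware allows.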

Contrast this with writing English text. Spelling is simple to check with other software, and grammar can be verified with simpler AI. But we don’t have software that can determine whether a poem is beautiful or an email subject will encourage someone to buy the product. We need humans to make this determination, and humans do not scale as well as software. Instead of billions of examples, scientists may only use thousands of English examples to continue training the model. As an aside, a larger model can make this determination for a smaller model; we call this a teacher model. But that won’t help us make a bigger model!

What roles might LLMs accelerate next?

Other jobs and tasks deal with a lot of language. If you’re reading this blog, you probably do too and will get plenty of benefit from LLMs. But not as many jobs deal with verifiable language, where simple software can easily determine correctness or accuracy. The tasks that language models will accelerate the most in the near term are those with a high-bandwidth feedback loop. I would first look at roles adjacent to programming, like Computer Aided Design, where a simulator may be able to determine whether a generated bridge is structurally sound. But can we be more creative in what we consider a feedback loop?

I wonder whether the tasks where we have already succeeded with supervised learning may also work well for reinforcing a language model. We have billions of examples of search results and social media posts that users clicked on or skipped. Could these data train a model that already writes in natural language to write even better titles and excerpts for advertisers and social media managers? Are sales numbers of novels useful to suggest more beautiful prose for authors? This is all speculation, but I suspect this pattern will continue: AI will work best on tasks where a lot of verifiable data are available. Do you find yourself using AI for tasks where clear feedback signals exist?

P.S. I didn’t strive to cover all nuances in my description of training. If you want more precise information on practical LLM training, the best I’ve found is Meta’s paper “The Llama 3 Herd of Models”. Section 4.3.1 describes how they improved programming performance in post-training.