I’m sure you’ve heard of the Audio Overview feature in Google NotebookLM, which produces podcast-like audio based on your files. It was the talk of the internet last week. A friend suggested pointing it at my LinkedIn profile, and the audio it produced was astounding. And flattering! I thought it would be fun to add lipsynced video. It was fun, but more than that, it was educational. Let’s talk about how I made this video, what I learned about AI while doing it, and how you can keep AI from digging itself into a deeper hole.

How the videocast works

If you’re just looking for the code, head to my GitHub repo for Hallo-There. Read on to learn how it works, and how to better work with AI.

The audio

Google hasn’t said how they did this, but the basics are easy to guess. Gemini’s strength over its competitors right now is handling very long context windows during LLM generation. That matters here: producing a cohesive 10-minute story in a single call requires all of the content to be available at once. It seems likely that NotebookLM loads detailed summaries of all the source documents into the context of one call to Gemini. The way I’d do it is to produce a narrative outline with citations, then go back through and write a script for each part of the outline. The audio itself is probably generated the same way others do it, with a multimodal model.
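Here is a minimal sketch of that outline-then-script idea, assuming a hypothetical `generate(prompt) -> str` callable that wraps one long-context LLM call. The prompts and function names are illustrative only; Google hasn’t published how NotebookLM actually does it.

```python
from typing import Callable, List

# Hypothetical stand-in for one long-context LLM call (e.g. to Gemini).
Generate = Callable[[str], str]


def make_outline(sources: List[str], generate: Generate) -> List[str]:
    """Stage 1: a single call sees every source and returns an outline,
    one section per line, with citations kept inside each line."""
    prompt = (
        "Write a narrative outline with citations, one section per line, "
        "for a two-host podcast based on these sources:\n\n" + "\n\n".join(sources)
    )
    return [line.strip() for line in generate(prompt).splitlines() if line.strip()]


def make_script(sources: List[str], generate: Generate) -> List[str]:
    """Stage 2: write dialogue for each outline section, keeping the whole
    outline and all sources in context so the parts stay consistent."""
    outline = make_outline(sources, generate)
    context = "\n\n".join(sources)
    parts = []
    for i, section in enumerate(outline, start=1):
        prompt = (
            f"Sources:\n{context}\n\nOutline:\n" + "\n".join(outline)
            + f"\n\nWrite the dialogue for section {i}: {section}"
        )
        parts.append(generate(prompt))
    return parts
```

The first call is where the long context pays off: it is the only step that has to reason over everything at once to shape the story.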

Because the podcast has two people talking, I needed to know which person was talking when. It wouldn’t work to have both people’s lips moving for the entire video. Copilot Pro helped me find the name for working out who is speaking when in an audio recording: diarization. Fortunately, there’s a great diarization model on Hugging Face: pyannote/speaker-diarization-3.1. It takes one input audio file and produces one output text file in RTTM format, which looks like this:

SPEAKER audio 1 0.031 2.396 <NA> <NA> SPEAKER_01 <NA> <NA>
SPEAKER audio 1 2.410 2.295 <NA> <NA> SPEAKER_00 <NA> <NA>
SPEAKER audio 1 4.638 0.996 <NA> <NA> SPEAKER_01 <NA> <NA>

You can see SPEAKER_00 and SPEAKER_01, along with the start time and duration (in seconds) of each stretch of speech. Then I was ready to tackle making the video!
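To use this output in a script, each line has to become a (speaker, start, end) turn. In RTTM, the fourth field is the onset, the fifth is the duration, and the eighth is the speaker label. A minimal parser sketch (the `Turn` and `parse_rttm` names are my own, not part of pyannote):

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Turn:
    speaker: str
    start: float  # seconds
    end: float    # seconds


def parse_rttm(text: str) -> List[Turn]:
    """Turn RTTM SPEAKER lines into (speaker, start, end) turns sorted by start."""
    turns = []
    for line in text.splitlines():
        fields = line.split()
        if not fields or fields[0] != "SPEAKER":
            continue  # skip blank lines and any non-speaker records
        start, duration = float(fields[3]), float(fields[4])
        turns.append(Turn(fields[7], start, start + duration))
    return sorted(turns, key=lambda t: t.start)
```

Applied to the sample above, the first turn ends at 2.427 s while the second starts at 2.410 s, so the speakers overlap by 17 ms. Anything that stitches video together has to decide who is on screen during overlaps like that.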

The video pieces

My first step was to generate a few images of two different speakers. I didn’t want just one image per speaker, because I know from experience that animating one static image for more than a few seconds quickly leads to chaos, or at least to something uncanny. This was a good opportunity to install Flux, the best open-weights image generation model today. I generated one image of a person that I liked, had ChatGPT describe it in extreme detail, then generated several images from that description. I picked the four that looked the most alike.

There are a few paid services (HeyGen, Hydra, or LiveImageAI) and open source tools that go from a static image and audio to lipsynced video. Since much of the point was to learn, I wanted open source. I had used SadTalker before, but Hallo has since been released with better quality. Others are in progress, although many may end up as proprietary systems. Hallo’s GitHub repo comes with an inference script that produces one video clip of one speaker. But this audio has two speakers, and I would need hundreds of clips!

This was a perfect time to try out OpenAI’s o1 model to write a lot of code. I gave it Hallo’s calling instructions and the paths to the generated images, and it wrote 200 lines of Python code that worked for me on the first try. This was extremely impressive! I had it make a few changes (readers, take note) and then set an A40 GPU rented from RunPod loose on it; 24 hours and $15 later I had 200 chunks of video. But I was about to be a lot less impressed with o1.
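For a sense of what a batch driver like this involves, here is a minimal sketch. It is not the script o1 wrote. It assumes Hallo’s `scripts/inference.py` accepts `--source_image`, `--driving_audio`, and `--output` (check the README of the version you clone), that ffmpeg is on the PATH, and that Hallo lives in a hypothetical `hallo/` directory. `Turn`, `plan_jobs`, and `run_jobs` are illustrative names.

```python
import os
import subprocess
from dataclasses import dataclass
from itertools import cycle
from typing import Dict, List, Tuple


@dataclass
class Turn:
    speaker: str
    start: float  # seconds
    end: float    # seconds


Job = Tuple[List[str], List[str]]  # (ffmpeg cut command, Hallo command)


def plan_jobs(turns: List[Turn], images: Dict[str, List[str]], audio: str,
              out_dir: str = "chunks") -> List[Job]:
    """Build one audio cut and one Hallo run per speaking turn.

    Each speaker's images are used in rotation so the same still isn't reused
    turn after turn. Paths passed to Hallo are absolute because it runs from
    inside its own repo directory."""
    out_dir = os.path.abspath(out_dir)
    rotation = {speaker: cycle(paths) for speaker, paths in images.items()}
    jobs: List[Job] = []
    for i, turn in enumerate(turns):
        stem = os.path.join(out_dir, f"{i:04d}_{turn.speaker}")
        cut = ["ffmpeg", "-y", "-i", audio,
               "-ss", f"{turn.start:.3f}", "-t", f"{turn.end - turn.start:.3f}",
               stem + ".wav"]
        animate = ["python", "scripts/inference.py",
                   "--source_image", os.path.abspath(next(rotation[turn.speaker])),
                   "--driving_audio", stem + ".wav",
                   "--output", stem + ".mp4"]
        jobs.append((cut, animate))
    return jobs


def run_jobs(jobs: List[Job], hallo_dir: str = "hallo") -> None:
    """Run every job in order; check=True stops at the first failure."""
    for cut, animate in jobs:
        os.makedirs(os.path.dirname(cut[-1]), exist_ok=True)
        subprocess.run(cut, check=True)
        subprocess.run(animate, check=True, cwd=hallo_dir)
```

Keeping the planning step separate from execution makes it easy to inspect or resume a long GPU run: you can print the jobs first, then skip any whose `.mp4` already exists.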

Combining video pieces

With the success of generate_videos.py, surely combine_videos.py wouldn’t be so hard? o1-preview took my instructions pretty well and produced another few hundred lines of working Python code. I could tell the resulting video was close, but it kept flashing to black. ChatGPT could not figure out why and kept making useless suggestions. I had to dig into the code myself to find that it was applying fade transitions, and because the clips were so short, they were mid-fade most of the time. It was also loading clips of both speakers when only one should have been on screen. This took a couple of hours to work out.
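The arithmetic behind the flashing is simple. If each clip fades in and fades out over some fade length, the fraction of the clip spent fading is twice the fade length divided by the clip length. A sketch, assuming a hypothetical 0.5 s fade (the actual fade length in the generated script isn’t known here): the three turns in the diarization sample would spend roughly 42%, 44%, and 100% of their time fading.

```python
from typing import List


def fade_fraction(clip_seconds: float, fade_seconds: float) -> float:
    """Fraction of a clip spent fading when it fades in and out over fade_seconds each."""
    if clip_seconds <= 0:
        raise ValueError("clip_seconds must be positive")
    return min(1.0, 2 * fade_seconds / clip_seconds)


# Durations of the three turns in the diarization sample, with a hypothetical 0.5 s fade.
durations: List[float] = [2.396, 2.295, 0.996]
for d in durations:
    print(f"{d:.3f} s clip: {fade_fraction(d, 0.5):.0%} fading")
```

With clips this short, hard cuts between speakers (a fade length of zero) are the simpler choice.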

The results were still mediocre. After a few clips, the video and audio would drift out of sync. Instead of continuing to debug, I asked ChatGPT to refactor the script from combining the available videos to using the diarization data to find the right video chunks. This was a disaster. After another few hours, I pointed out the bugs to ChatGPT. It failed to make at least one of the fixes, the video was black most of the time, and synchronization was still pretty bad. I spent a few more hours fixing the remaining bugs myself. The output was workable, but still not very good.
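One plausible source of that kind of drift (a guess, not a diagnosis of the actual script): diarization turns don’t tile the timeline exactly. The sample above has small gaps and overlaps, so laying clips end to end gradually shifts each one away from where its speech really starts. The sketch below, using that sample’s onsets and durations, compares end-to-end placement with anchoring each clip at its own onset. The function names are hypothetical, and the 25 fps frame rate is an assumption.

```python
from typing import List, Tuple

Turn = Tuple[float, float]  # (onset, duration) in seconds, as in the RTTM fields


def end_to_end_drift(turns: List[Turn]) -> List[float]:
    """Where each clip lands if clips are simply concatenated, minus where its speech starts."""
    drift, t = [], 0.0
    for onset, duration in turns:
        drift.append(t - onset)
        t += duration
    return drift


def anchored_frames(turns: List[Turn], fps: int = 25) -> List[Tuple[int, int]]:
    """Place each clip at its own onset instead: (first_frame, frame_count) on the output timeline."""
    return [(round(onset * fps), max(1, round(duration * fps))) for onset, duration in turns]


sample = [(0.031, 2.396), (2.410, 2.295), (4.638, 0.996)]
print(end_to_end_drift(sample))
print(anchored_frames(sample))
```

End to end, the third clip already starts about 53 ms late, and the error keeps accumulating over hundreds of turns. Anchoring keeps every clip within half a frame of its audio no matter how many turns come before it. Overlapping turns still need a rule for who is on screen: in this sample, frames 60 and 116 each fall inside two clips, and a simple choice is to let the later turn win.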

I took a break for a week. Instead of continuing the conversation thread with ChatGPT and o1-preview, I started a new one, making sure to include the specification changes I had made mid-conversation. This time, the first attempt, at 140 lines, was almost perfect. The audio was poor because it had used the video chunks’ audio instead of the original audio file. I swapped that out myself with a couple of lines and got an excellent result. Why did it work this time? Because of how LLMs, and particularly chat interfaces to LLMs, work.
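Replacing the audio amounts to taking the video stream from the stitched clips and the audio stream from the original podcast file. Here is a minimal sketch of one way to do that with ffmpeg, built as a command list in Python. The file names and the `mux_original_audio` helper are hypothetical, and this is not necessarily the change made here.

```python
from typing import List


def mux_original_audio(video: str, audio: str, output: str) -> List[str]:
    """ffmpeg command: video from the stitched clips, audio from the original file."""
    return [
        "ffmpeg", "-y",
        "-i", video,      # input 0: stitched lipsync video (its audio is ignored)
        "-i", audio,      # input 1: original podcast audio
        "-map", "0:v:0",  # take the first video stream from input 0
        "-map", "1:a:0",  # take the first audio stream from input 1
        "-c:v", "copy",   # keep the video as-is, no re-encode
        "-c:a", "aac",    # encode the audio as AAC for the MP4 container
        "-shortest",      # end when the shorter of the two streams ends
        output,
    ]
```

Pass the result to `subprocess.run(mux_original_audio("combined.mp4", "podcast.wav", "final.mp4"), check=True)`. Because the original audio is continuous, it can’t drift the way per-chunk audio can; it only requires the video to be placed against the same timeline.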

Digging oneself deeper

My conversation thread in ChatGPT ended up being 92 pages long – maybe 40k tokens. It got harder and harder to work with ChatGPT to fix bugs as the conversation went on. LLMs do get worse when they need to work with a lot of tokens in their context window, but I don’t think that was the main issue. The bigger problem is that once an AI starts down a path, it has a hard time deviating from it. Once it has dug a hole, it will keep digging itself deeper.

Digging itself deeper means that when I made a requirements change, o1 made minimal updates to the code it had already given me. It wouldn’t restructure the code to fit the new requirement better. This is somewhat like any software engineer working against a deadline, but AI struggles with it more than humans do. The original requirement is still in the context window, shaping how it thinks about the changed requirement. That’s fine for a small change, such as changing a variable, but it doesn’t work at all when you ask it to think about the problem in a different way.

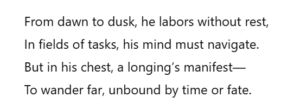

This doesn’t only apply to programming. Here’s a quick test you can try: ask ChatGPT or Copilot to “write a sonnet about a bee named Archibald,” then tell it “make Archibald a human instead.” Here’s the result when I tried it. The second sonnet gives human Archibald the same qualities and desires as the bee! He’s hard-working, and he seeks to shirk his duty and explore. The conversation history strongly shapes the output, even when the new request asks for something different.

As a conversation grows longer, AI gets into a rut: it becomes less creative, more repetitive, and overall less useful. Often the solution is to start over with a new conversation. Bring only the minimum context necessary and rewrite the task.
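One way to make “bring only the minimum context” concrete is to fold the requirement history into its current state before opening the new conversation, so superseded requirements never reach the model. A minimal sketch; `fresh_prompt` is a hypothetical helper, and the keyed-requirements structure is my own assumption about how you might track changes.

```python
from typing import Dict, List, Tuple


def fresh_prompt(task: str, spec: List[Tuple[str, str]], files: Dict[str, str]) -> str:
    """Build the opening message for a new conversation.

    spec is the requirement history in order, as (key, requirement) pairs. A later
    entry with the same key replaces the earlier one, so only the current version
    of each requirement is included."""
    current: Dict[str, str] = {}
    for key, requirement in spec:
        current[key] = requirement  # dicts keep first-insertion order; the last value wins
    lines = [task, "", "Requirements:"]
    lines += [f"- {req}" for req in current.values()]
    for name, content in files.items():
        lines += ["", f"{name}:", content]
    return "\n".join(lines)


history = [
    ("audio", "Use each chunk's own audio."),
    ("speakers", "Show both speakers."),
    ("audio", "Use the original podcast audio, not the chunks' audio."),
    ("speakers", "Show only the active speaker."),
]
print(fresh_prompt("Write combine_videos.py.", history, {"audio.rttm": "SPEAKER audio 1 ..."}))
```

The new conversation sees two requirements instead of four, with no trace of the abandoned versions to anchor on.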