I’m sure you’ve seen the Ghibli-style images generated with ChatGPT all over the internet. They are neat, but there are so many other incredible capabilities unlocked by this release. Transformer models understand images in a new way that avoids many of the traps of diffusion models. In this article I compare the new model with the previous state of the art, investigate how it works, and show some new types of things we can do now.

A Brief History of Diffusers

I have been playing with diffusion models since DALL-E was released in early 2021. Diffusion is the model architecture that was behind all the generative art before last week. I’ve never used any images commercially or used them instead of paying a human artist. My interest is more in the technology of diffusion. It’s a fun hobby!

What I find most interesting is how diffusers are trained. Start from a labeled image, then add random color pixels to it until the image is unrecognizable. Once you’ve done that a billion times, the model has learned how to most efficiently remove the information in an image by covering it with “noise.” Because that’s represented in math, now you can do the math backwards. Start from random pixels, and remove the noise by correcting each pixel until the result looks like the image that would have carried that label (prompt) if it had existed in the training data. Wild!
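To make "doing the math backwards" concrete, here is a minimal sketch of one noising step and its inverse. It assumes the common Gaussian formulation (mix the clean pixels with random noise according to a single blend value, called `alpha_bar` here), which is a simplification of the "random color pixels" description. The function names and the four-pixel "image" are made up for illustration. A real diffuser uses a trained network to predict the noise; this sketch cheats by reusing the true noise.

```python
import math
import random

def add_noise(pixels, alpha_bar, rng):
    """Forward step: blend the clean pixels with Gaussian noise.

    alpha_bar near 1.0 keeps most of the image; near 0.0 leaves almost pure noise.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [
        math.sqrt(alpha_bar) * p + math.sqrt(1.0 - alpha_bar) * n
        for p, n in zip(pixels, noise)
    ]
    return noisy, noise

def remove_noise(noisy, predicted_noise, alpha_bar):
    """Reverse step: subtract the predicted noise to estimate the clean pixels."""
    return [
        (x - math.sqrt(1.0 - alpha_bar) * n) / math.sqrt(alpha_bar)
        for x, n in zip(noisy, predicted_noise)
    ]

rng = random.Random(0)
image = [0.1, 0.5, 0.9, 0.3]  # a toy 4-pixel "image"
noisy, true_noise = add_noise(image, alpha_bar=0.2, rng=rng)

# A trained model would have to guess the noise from `noisy` and the prompt.
# Here we pass in the true noise, so the recovery is exact up to rounding.
recovered = remove_noise(noisy, true_noise, alpha_bar=0.2)
```

Training amounts to making the noise guess good enough that the reverse step works when the true noise is not available.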

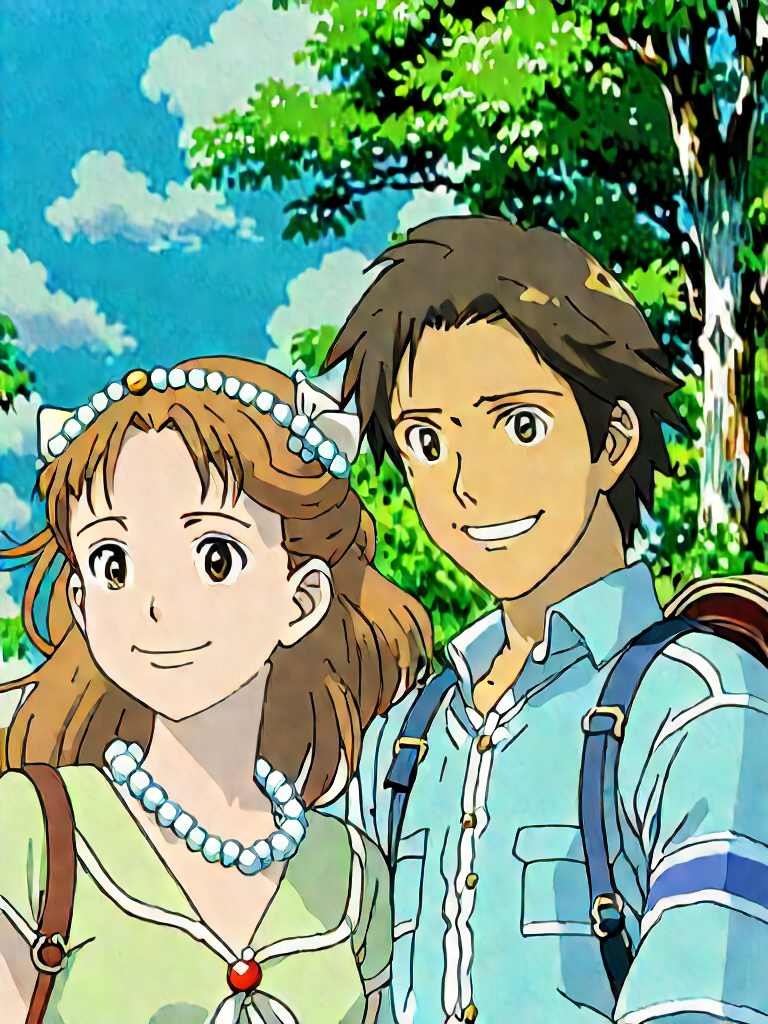

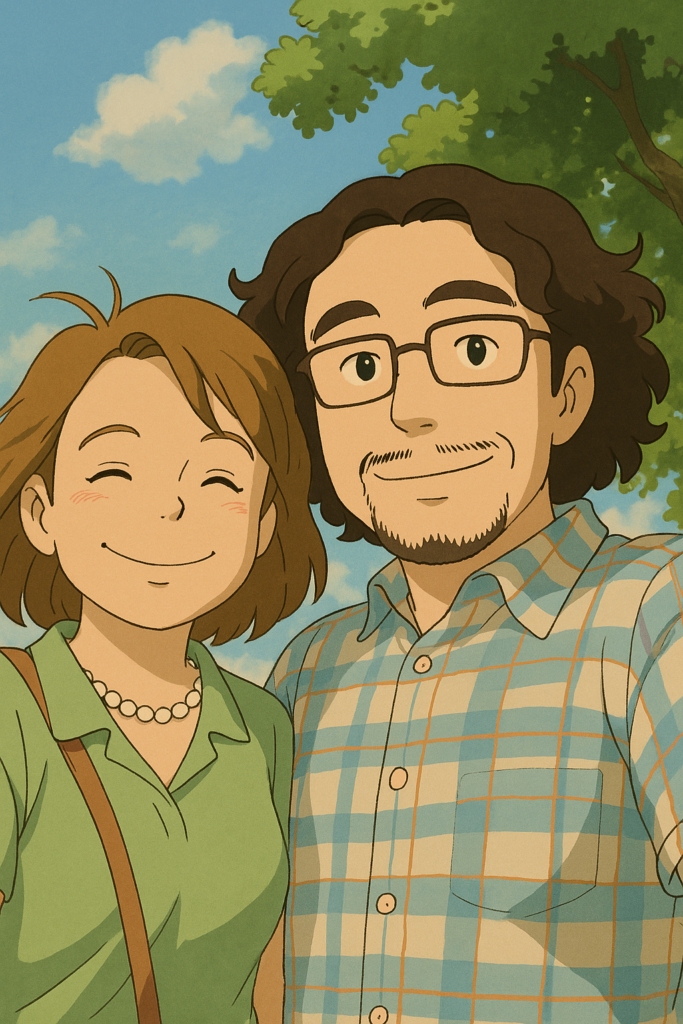

I spent a couple of hours using previous diffusion tools to Ghiblify this image of my wife and me.

We’ve had remarkable progress since the first release of DALL-E! The models have gotten much better, and the tools have too.

- DALL-E mini (renamed Craiyon) was the original model made easily available on the internet, soon after OpenAI released DALL-E. It was the first image generator that became popular; suddenly you could immediately get any image you could think of! It was famously terrible at producing faces.

- Stable Diffusion 1.5 (SD 1.5) from Stability AI was perhaps the first “good” diffusion model that anyone could run locally. It’s great at faces, and it’s terrible at eyes and hands. SD 1.5 is the main reason I purchased a graphics card with 12 GB of VRAM. It was made available in an ugly interface from Automatic1111, and nerds all over the world rejoiced.

- Control nets are like the constrained grammars used to make LLMs output valid JSON. They force the output to match a structured framework. For the two control net images, I used OpenPose, which maps out the “skeleton” of any human shapes and makes sure those skeleton poses persist in the result. Those “hidden image” pictures were made with a control net.

- Deliberate v2 is one of many, many fine-tunes of Stable Diffusion 1.5. Because of how small the base model is, consumer hardware is powerful enough to make new versions of it. SD 1.5 remains popular to this day due to the thousands of fine-tunes it has, as well as how quickly it can run.

- Regional prompting allows you to use different prompts for different parts of the image. This was a big help to avoid putting a necklace on me, although you can see my wife still got eyeglasses.

- Stable Diffusion XL (SDXL) was the first popular model to natively support images larger than 512×512. Up until this time, most diffusion images were square. Larger images yielded duplication of the subject, while narrower images caused artifacts.

- Image-to-image (i2i) is contrasted with text-to-image (t2i). In t2i, the image begins as completely random color pixels. In i2i, only about 75% of the pixels are random; the rest come from the source image. When done carefully, much of the structure of the original is still there. You can see the leaves on the tree match up closely with the source image.

- Flux.1 Dev is the current king of open-weights models. It allows for composition (arrangement) of multiple subjects, a moderate amount of text, and it has much higher quality overall. This is when we were able to get past “AI generated smell.” But even on my beefy graphics card, a reduced-size version still takes 90 seconds for one image. Automatic1111 was slow to support Flux, and so much of the community moved to Comfy, a node-based workflow design tool.

- Low Rank Adaptation (LoRA) is popular to add deep knowledge of subjects or styles to the base model. For large models like Flux, training a LoRA is much cheaper than a full fine-tune and results in much smaller files. Folks that are more into image generation than me may have hundreds of LoRAs that they combine for just the right output.
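Why are LoRA files so much smaller? A rough sketch of the arithmetic, assuming the usual formulation where a weight matrix `W` is left untouched and the LoRA stores two thin matrices `B` and `A` whose product is added to it. The layer size and rank below are illustrative numbers, not the actual dimensions of Flux or SD 1.5, and the helper names are made up.

```python
def full_finetune_params(d_out, d_in):
    """Values stored when a whole d_out x d_in weight matrix is retrained."""
    return d_out * d_in

def lora_params(d_out, d_in, rank):
    """Values stored by a LoRA: B is d_out x rank, A is rank x d_in."""
    return d_out * rank + rank * d_in

def apply_lora(W, B, A, scale=1.0):
    """Return W + scale * (B @ A), using plain nested lists."""
    rows, cols, rank = len(W), len(W[0]), len(A)
    return [
        [W[i][j] + scale * sum(B[i][r] * A[r][j] for r in range(rank))
         for j in range(cols)]
        for i in range(rows)
    ]

# One illustrative 4096 x 4096 layer with a rank-16 LoRA.
full = full_finetune_params(4096, 4096)   # 16,777,216 values
small = lora_params(4096, 4096, rank=16)  # 131,072 values
ratio = full // small                     # 128 times fewer values to store

# A tiny worked example: a 2 x 2 weight with a rank-1 update.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]
A = [[3.0, 4.0]]
adapted = apply_lora(W, B, A)             # [[4.0, 4.0], [6.0, 9.0]]
```

Because each LoRA is just an additive update, several can be summed onto the same base weights, which is how people stack them.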

And now even Flux seems terrible in comparison to GPT-4o’s native image generation. It is not a diffuser; let’s explore how it works.

Transformers Generating Images

GPT-4o is a multi-modal transformer model. It is the default text model behind ChatGPT, and it’s also what powers Advanced Voice Mode. It could already understand images, and last week OpenAI opened up the capability to generate images. As with diffusers, generating an image is just a matter of understanding an image in reverse. But until now, no one had been successful with a practical implementation.

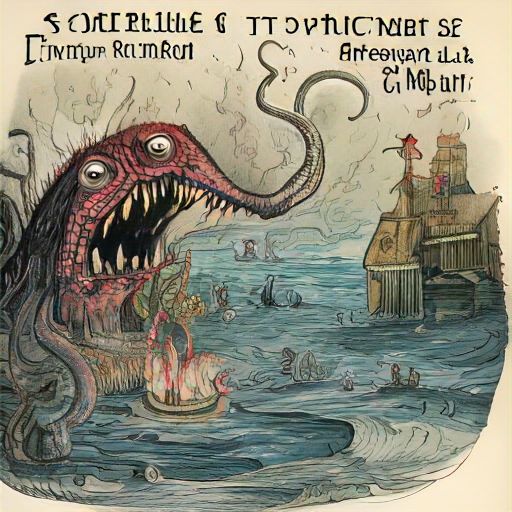

Researchers at Meta developed the Chameleon transformer with image capabilities last May. I used a fine-tune of it called Lumina-mGPT to create this monstrosity, and it took hours.

Diffusers remove noise from all parts of the image simultaneously. GPT-4o instead produces one 8×8 block of pixels at a time: left-to-right, then top-to-bottom. You can think of it like it has turned the image into a page of text. Each bit of the image depends on the bits of the image already produced, just as LLMs produce words that depend on the previous words in the conversation. Both bits of images and chunks of words are tokens to the model, and the same transformer works across them both (as well as audio).
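Here is a toy sketch of that "page of text" ordering. It is not how GPT-4o is implemented. The `toy_next_token` function is a made-up stand-in for the transformer, and the grid size and vocabulary are arbitrary. The only point is the loop: each image token is produced left-to-right, top-to-bottom, and is appended to the same sequence that holds the prompt, so every later token can depend on everything before it.

```python
import random

def toy_next_token(context, vocab_size, rng):
    """Hypothetical stand-in for the transformer.

    A real model would compute a probability distribution from the context;
    this just mixes the context sum with a random draw so the dependency
    on earlier tokens is visible.
    """
    return (sum(context) + rng.randrange(vocab_size)) % vocab_size

def generate_image_tokens(width, height, prompt_tokens, vocab_size=16, seed=0):
    """Fill a height x width grid of image tokens in raster order."""
    rng = random.Random(seed)
    sequence = list(prompt_tokens)            # text and image share one sequence
    grid = [[None] * width for _ in range(height)]
    order = []
    for row in range(height):                 # top-to-bottom
        for col in range(width):              # left-to-right
            token = toy_next_token(sequence, vocab_size, rng)
            sequence.append(token)            # later blocks can see this one
            grid[row][col] = token
            order.append((row, col))
    return grid, order

grid, order = generate_image_tokens(width=3, height=2, prompt_tokens=[1, 2, 3])
# order == [(0, 0), (0, 1), (0, 2), (1, 0), (1, 1), (1, 2)]
```

In a real system each token would then be decoded back into a block of pixels. A diffuser, by contrast, has no such ordering, because it updates every position at once.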

Occam’s Razor suggests that we should believe the text on the whiteboard in OpenAI’s first promotional image that describes the process. The transformer produces a low-resolution version of the image. Then a diffusion model takes over to improve the quality and scale it up. We don’t know yet if the diffuser is applied to each block of pixels or only to the entire image. Some folks have inspected the images sent by ChatGPT to the local browser and found that the pixels at the top of the image change a bit as the image completes. One last thing we’ve discovered is that it handles transparency by generating an image of the traditional black & white checkerboard. A background-removal tool is run afterwards.

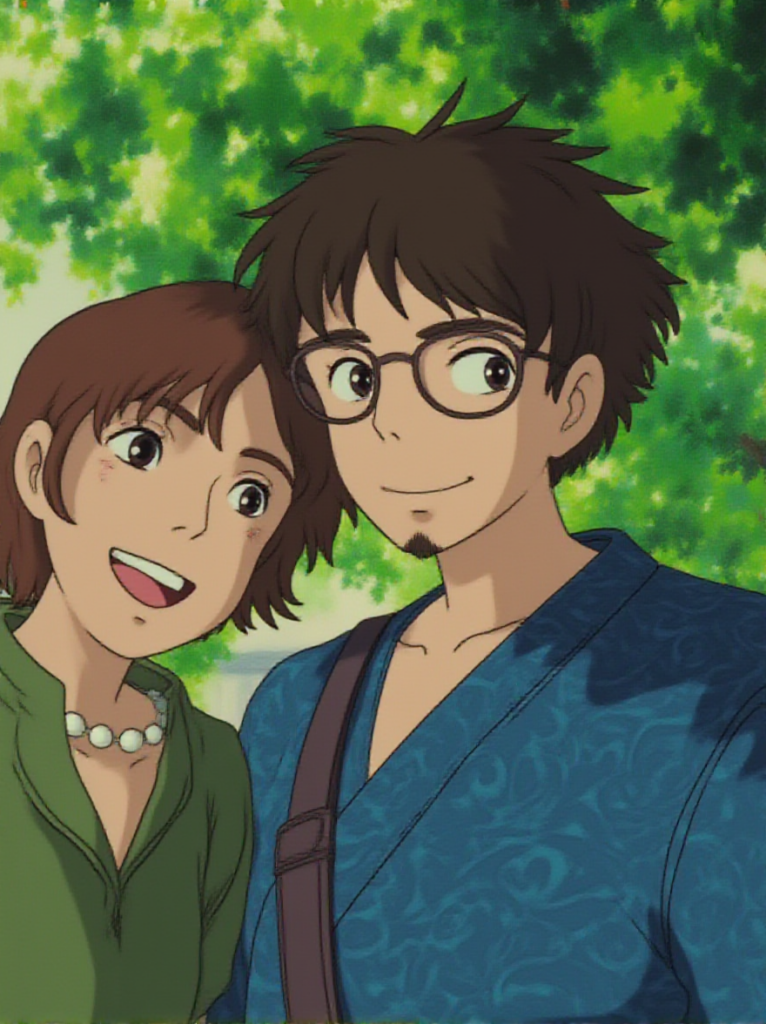

This was my first try with GPT-4o. Instead of hours of work and dozens of failures, I got nearly perfect results right away. It’s got the correct accessories on each of us, managed to get my beard approximately correct, and even got the pattern of my shirt! You can tell it “understands” what the image is in a way that is deeper than what the pixels are. Maybe someone more skilled with diffusion tools could match this, but it would take them all day.

This is a watershed moment for generative AI, and not only because image generation is another step-function better.

New Possibilities

Treating chunks of images like chunks of text marks a significant breakthrough. So much more is possible than the text-to-image and image-to-image that we were using before. Just as OpenAI found emergent behavior when they scaled up GPT to 3.5, we are seeing the same with GPT-4o image generation. The model behaves as though it understands the meaning of the things in the image and their relationships. In the picture of my wife and me, you can see it is thematically correct and gets all the details. However, it has moved things around a little bit; it is not matching pixels!

Diffusers are stuck relating pixels to other pixels. In the training data, a woman and a mirror always show the same reflection. A diffuser could never do anything else. GPT-4o moves out of this trap to create entirely novel scenes like the one in the header image.

I don’t want to over-hype, so I’ll stick to publicly demonstrated scenarios that were fully impossible before:

- GPT-4o can use the same characters in multiple images. If you generate the start and end frame of something you want to animate, you can put it into Sora, Runway, or Kling to animate a scene. Go ahead and choose any animation style that you can think of.

- Similarly, GPT-4o can generate a product image in several contexts. With some animation and voice-over, you have a commercial.

- It’s now easy to see what your house would look like with built-in shelves or how a different coffee table would look with your sofa.

- It is pretty good with text, which means you can get entire infographics. Perhaps my Unprompting Notecard could be generated now instead of taking hours in Canva?

- You can quickly get software interface mock-ups, from however you want to describe it. Natural language of course, but why not give it JSON of your data shape and ask it to make an edit interface? Also include your existing interface so it can match your style. And then have it write the interface code!

Be warned, deepfakes are now also trivial. Any person can be put into any existing image or new image. Or you could send your boss a picture of a flat tire on your car, with a receipt of the repair bill. The unscrupulous will remove watermarks from stock images. These tasks were possible before, but now they are trivial. Don’t trust any picture.

It feels like anything that you want to do visually is now possible. Is that true?

What Remains to Improve

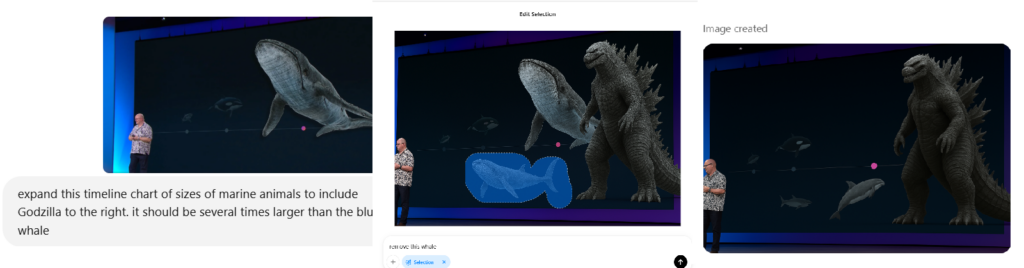

There are some things that GPT-4o cannot do yet. Images with a lot of text will have artifacts. Resolution is limited. There are still places where it cannot go against its training data; I saw someone generate an upside-down poster, but some of the letters didn’t flip and the words were reversed instead. Perhaps we need a Godzilla-sized model?

You can see in these screenshots that there is a selection tool to tell the model what to focus on, although I’m not sure it works yet. I expect GPT-4o is going to get more tools like the background removal that it has now. It could get SVG generation as well as basic editing tools like flip and reverse. Many more tools from open source would be valuable, like inpaint sketching (drawing a simple version of what you want). A more controllable way to do outpainting would be very welcome. And finally there’s latency; when images take only a second or two to generate, whole other use cases open up.

It has never been easier to get incredible results from image generation. With some trial and error, you can create or modify anything you can imagine. There’s never been a better time to get into the hobby. What will you create?